Novel Deep Learning Approach Allows Rapid Analysis of Visual Disaster Data

Published on July 30, 2018

Credit: Chul Min Yeum, Purdue University

In the aftermath of a natural disaster, engineers arrive on the scene to document the consequences of the event on buildings, bridges, roads and pipelines. Aiming to learn from structural failures, reconnaissance teams take around 10,000 photos each day, moving quickly to capture crucial data before it is lost. Then every image must be analyzed, sorted and described: tasks that take people a tremendous amount of time.

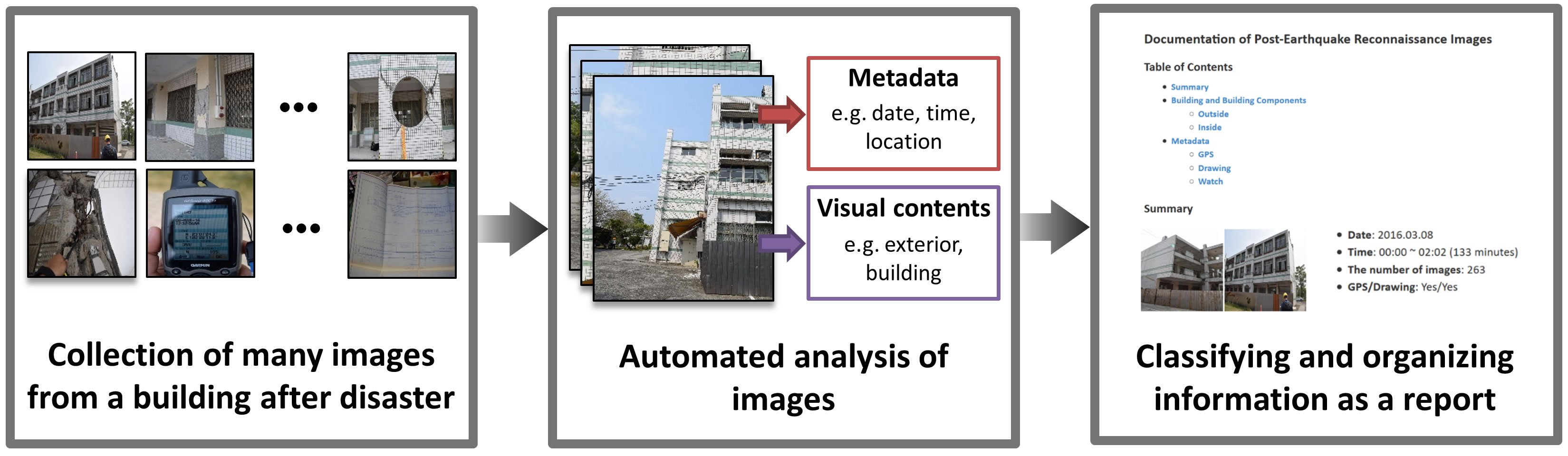

In the first-ever implementation of deep learning for automating reconnaissance image analysis, Purdue University civil engineering researchers Shirley Dyke and Chul Min Yeum have created a way for computers to analyze visual data describing damage from natural hazards faster and more consistently than humans. Specifically, the Purdue team has deployed deep learning algorithms to develop an online tool that automatically classifies images collected after disasters, a tool that directly supports field teams as they gather important perishable data.

Deep learning refers to artificial neural network algorithms that pass data through many successive layers of computation to solve specific problems. Researchers integrate domain knowledge to train these algorithms to recognize scenes and locate objects in images. Once each image is automatically analyzed, these complex, unstructured image sets can be organized into a format that field teams can use in just a couple of minutes, saving them a great deal of time.
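To make the idea of "layers of computation" concrete, here is a minimal Python sketch of a forward pass through a tiny two-layer network that scores an input as "collapse" or "no collapse". It assumes a handful of made-up numeric input features and hypothetical, hand-written weights; it is not the Purdue team's model. Real image classifiers typically work directly on pixels, use convolutional layers, and learn their weights from labeled photographs rather than having them written by hand.

```python
import math

# Hypothetical toy network: 4 input features -> 3 hidden units -> 2 classes.
# The weights below are made-up numbers for illustration, not trained values.

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Non-linearity applied between layers: negative values become zero."""
    return [max(0.0, v) for v in values]

def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def forward(features, layers):
    """Pass features through each (weights, biases) layer in turn,
    applying ReLU between layers and softmax after the last one."""
    h = features
    for i, (w, b) in enumerate(layers):
        h = dense(h, w, b)
        if i < len(layers) - 1:
            h = relu(h)
    return softmax(h)

LAYERS = [
    # Layer 1: 3 hidden units, each with 4 input weights, plus 3 biases.
    ([[0.2, -0.1, 0.4, 0.0],
      [-0.3, 0.5, 0.1, 0.2],
      [0.1, 0.1, -0.2, 0.3]], [0.0, 0.1, -0.1]),
    # Layer 2: 2 output classes, each with 3 hidden-unit weights, plus 2 biases.
    ([[0.6, -0.4, 0.2],
      [-0.5, 0.3, 0.1]], [0.0, 0.0]),
]
CLASSES = ["collapse", "no collapse"]

probs = forward([0.9, 0.1, 0.7, 0.3], LAYERS)
print(dict(zip(CLASSES, probs)))
```

Stacking more layers lets a network build up more abstract features from simpler ones; training adjusts the weights so that labeled example images receive the correct class.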

Purdue researchers Shirley Dyke and Chul Min Yeum train their algorithms to understand and identify aspects of damage imagery that are important for natural hazard damage reconnaissance. (Photo: Mark Simons, Purdue University)

To develop suitable algorithms for classifying post-disaster imagery, Dyke and Yeum started with an unstructured 8,000-image data set from several past hazard events. They designed classes suited to engineering purposes and carefully labeled the photographs: for instance, marking building components as either collapsed or not collapsed, and identifying areas affected by spalling, where concrete chips off structural elements due to large tensile deformations. Once training is complete, the algorithm can automatically classify images and organize them for use by the field teams.
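The final step, turning per-image classifier outputs into groups a field team can browse, might look like the Python sketch below. It assumes a classifier that returns an independent score for each class and a fixed threshold of 0.5; the filenames, scores and the `organize` helper are hypothetical and are not taken from the Purdue tool. Class names follow the examples in the text, with a collapse score below the threshold read as "not collapsed".

```python
from collections import defaultdict

# Hypothetical per-image scores, as a trained classifier might output them.
# Filenames and numbers are made up for illustration.
SCORES = {
    "IMG_0001.jpg": {"collapse": 0.92, "spalling": 0.10},
    "IMG_0002.jpg": {"collapse": 0.05, "spalling": 0.81},
    "IMG_0003.jpg": {"collapse": 0.71, "spalling": 0.66},
    "IMG_0004.jpg": {"collapse": 0.12, "spalling": 0.20},
}

def organize(scores, threshold=0.5):
    """Group image names under every class whose score meets the threshold.
    Images that match no class go to 'unclassified' for manual review."""
    groups = defaultdict(list)
    for name in sorted(scores):
        matched = [cls for cls, s in scores[name].items() if s >= threshold]
        for cls in matched:
            groups[cls].append(name)
        if not matched:
            groups["unclassified"].append(name)
    return dict(groups)

for cls, names in sorted(organize(SCORES).items()):
    print(f"{cls}: {', '.join(names)}")
```

Because each class is thresholded independently, a single photo (here `IMG_0003.jpg`) can appear under both collapse and spalling, and photos that match no class are set aside for review rather than discarded.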

"Deep learning algorithms are quite powerful, but domain expertise is an essential ingredient needed to successfully perform the fundamental research to address their use for building image classification," Yeum says.

The team has gathered about 140,000 digital images, including those from recent earthquakes in Nepal, Chile, Taiwan and Turkey, and trained its algorithms on them.

Using deep learning techniques, the Purdue team has created an online visual data analysis tool called the Automated Reconnaissance Image Organizer, or ARIO. In the field or after a reconnaissance mission, researchers can upload images to ARIO, where they are automatically analyzed and organized into a shareable online report to support real-world disaster reconnaissance missions. A prototype is available for testing and initial use by field teams.

"Often, design codes for buildings are based on lessons derived from these data," Dyke says. "If these data could be organized more quickly, more high-quality data can be gathered and used, leading to safer infrastructure and more resilient communities."

The team draws on the computational expertise of Bedrich Benes and PhD student Mathieu Gaillard in Purdue's Department of Computer Graphics Technology, and of Tom Hacker in the Department of Computer and Information Technology. The team works closely with Santiago Pujol, who, as director of the Center for Earthquake Engineering and Disaster Data, surveys and collects damage data from major disasters around the world.

Contact: Shirley Dyke, sdyke@purdue.edu

Award #1608762, CDS&E: Enabling Time-critical Decision-support for Disaster Response and Structural Engineering through Automated Visual Data Analytics, July 15, 2016, to June 30, 2019 (estimated).